Creating a Demo Movie

January 17th, 2019

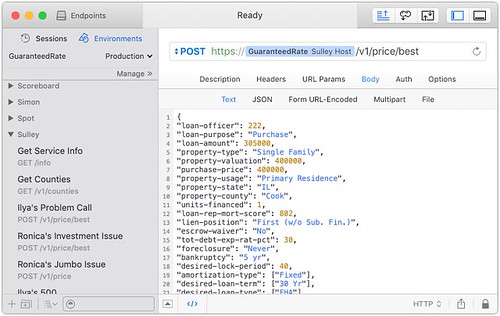

This week has been a Hackathon at The Shop, and I was asked to work on one focused on a cross-domain, secure, component-based Web App Framework, based on the work done at PayPal by the kraken.js team. It was a steep learning curve for me and the others on the Hackathon team, as none of us had any real experience with Node.js or React, and we had only this week to get something going.

The good news is that we got everything we needed running late yesterday, and today I started work on the Demo presentation, which happens tomorrow. The twist is that the demos are videos, with each group submitting one. The only limitation is that the video has to be less than 5 min long, and that's a hard limit, I'm told.

OK... so I was looking at all the screen capture tools on the App Store, and some of them looked pretty decent, but the good one was $250, and while I might go that high, I wanted it to be amazing for that price... like OmniGraffle good. I also saw a lot of really iffy reviews. So that didn't seem like the way to go.

Because I needed to be able to add in slides and screen grabs, I knew I needed more than the simple "start recording" that Google Hangouts offers, and with nothing really obvious in the App Store or in Google searches... well... I hit up a few of my friends who produce stuff like this. Funny thing... they said "QuickTime Player and iMovie."

This really blew me away... I mean I knew about iMovie, but I didn't know that QuickTime Player did screen recordings, and with a selectable region on the screen. It also does audio recordings, which mattered because I was going to need to do voice-overs on the slides and on the things happening on the screen in the demo.

So I started recording clips. Keynote was nice in that I could make all the slides there, and export them as JPEG files, and they imported perfectly into iMovie. Then I could put them in the timeline for exactly how long I needed them, and do any transitions I needed to make it look nice.

Then I went into a little phone booth we have at The Shop and recorded the audio with very little background noise. I could then re-record the audio clips as needed to make it all fit within the 5 min hard limit. In the end, I exported the final movie and uploaded it to the Google Drive for the submissions.

Don't get me wrong... iMovie had a steep learning curve for about the first hour. How to move, select, add things, remove things... not obvious, but with a little searching and experimenting, I got the hang of the essentials. And honestly, that's all I needed to finish this Demo video.

I was totally blown away in the end. I was able to put something really nice together with a minimum of fuss, and now that I have the essentials in hand, it'll be far easier next time. Just amazingly powerful tools from Apple, all installed on each new Mac. If only more people knew...