Storm Doesn’t Always Guarantee a Good Balance

Tuesday, November 4th, 2014

I trust the developers of Storm way too much. Maybe the truth of the matter is that I have assumptions about how I'd have developed certain aspects of Storm, and they seem perfectly logical to me. When those don't turn out to be the way it's really done in Storm, then I feel like I trust the Storm developers too much. Case in point: Balance.

I had a spout with a parallelization hint of 20. I had a topology with 20 workers. Right away, I'm thinking that no matter how the workers are arranged on the physical hardware of the cluster, there should be one - and only one - spout per worker. That way we have balanced the work load, and assuming that there are sufficient resources on each physical box for the configured number of workers, then we have the start of a nicely balanced topology.

Sadly, that assumption was wrong, and determining the truth took me several hours.

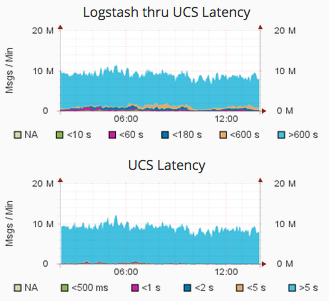

I was looking for problems in the topology related to the latency of it - lots of high capacity numbers in the UI. So I started digging into the spouts as well as the bolts, and what I aw in three of my four topologies was that there were six - out of twenty - spout instance that had zero emitted tuples. Not a one.

I looked at the logs, and they were grabbing messages from Kafka... updating the zookeeper checkpoints... just what it should do. I was worried that kafka was messed up, so I restart two of the nodes that seemed to have problems. No difference.

Hours I sent trying to figure this out.

And then I decided to just write down all the machine/partition data for each of the spouts and see if there was something like a missing partition somewhere. And as I wrote them all down I saw there were twenty of them - just like there should be... and yet the spouts reporting zeros had the same machine/partition configurations as another spout that was returning data.

Storm had doubled-up some of the spouts, and left others empty, and done so in a very repeatable way. In a sense, I was pretty upset - why log that you're reading messages if you're really "offline"? Why don't you distribute the spouts evenly? But in the end, I was just glad that things were working. But boy... it was a stressful hour.