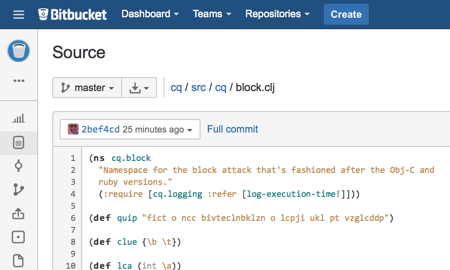

Another Boost in the CryptoQuip Performance (cont.)

Friday, December 19th, 2014

I got another email from my friend on the updates I'd made to the solver, and he pointed out that the pattern function we were using:

(defn pattern "Function to take a word (as a string) and return a vector that is the pattern of that word where the values are the index of the character. => (pattern \"see\") (0 1 1) => (pattern \"rabbit\") (0 1 2 2 3 4) " [word] (let [dw (distinct word)] (map #(.indexOf dw %) word)))

does something that's not strictly necessary to the proper functioning of the algorithm - the distinct call on the input word.

If we have a function that maps these words into some kind pattern, then it really doesn't matter what the pattern is - as long as it's just the pattern, and is not able to be confused with another pattern. So if we choose to change the code to look something like this:

(defn pattern "Function to take a word (as a string) and return a vector that is the pattern of that word where the values are the index of the character. => (pattern \"see\") (0 1 1) => (pattern \"rabbit\") (0 1 2 2 4 5) " [word] (map #(.indexOf word (int %)) word))

where we are ignoring the cost of the repeated characters in the word in terms of the index they represent, then we have a similar pattern that's deterministic and comparable, but it lacks the call to distinct, and so it's going to be a little faster.

In the REPL, we can see the old way:

cq.main=> (time (dotimes [_ 100000] (dpat "bivteclnbklzn"))) "Elapsed time: 8.748 msecs" nil cq.main=> (time (dotimes [_ 100000] (dpat "bivteclnbklzn"))) "Elapsed time: 8.158 msecs" nil

or about 87 nsec/call. That's fast. But when we test the new non-distinct mapper, we get:

cq.main=> (time (dotimes [_ 100000] (pattern "bivteclnbklzn"))) "Elapsed time: 4.859 msecs" nil cq.main=> (time (dotimes [_ 100000] (pattern "bivteclnbklzn"))) "Elapsed time: 4.972 msecs" nil

or down to about 49 nsec/call. That's another significant percentage on that call, but I doubt it'll convert to something really significant in the overall solution. It's down to about 5 to 6 msec per benchmark solution. Not bad, considering it's all on a laptop, and all on the JVM. I do love this game!